
You’ve seen it on TV.

A police forensic computer scientist stares at a computer screen full of thousands of ones and zeros and states, “Oh, this guy is good!”

This leaves you wondering, why all the ones and zeros?

Is this how computers really work?

Why Do Computers Use Binary?

Computers use binary because it is the simplest counting system available, and it is how a computer encodes everything from data in memory to HD video streams.

Binary allows a computer to process millions of inputs very quickly.

With binary, there are only two options: on or off.

Computers communicate by stringing a series of ons and offs into complex groups which tell the computer what it is supposed to do.

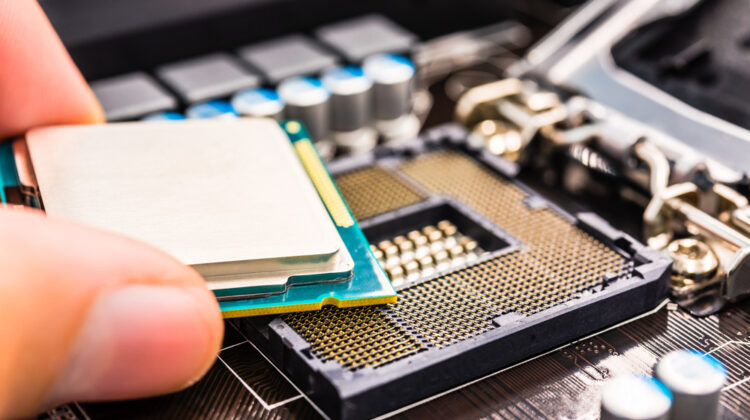

To achieve this, computer systems use a series of switches and electrical signals that tell them what to do.

Think of a standard light switch: it has two options, on and off.

Computers rely on this same concept and use transistors as electrical switches.

The transistor is either switched on or off through an electrical signal.

The computer reads the on/off signals to create the desired output or complete the programmed function.

What Is Binary?

Binary is a base-2 numeral system, a way of counting that allows only two digits, 0 and 1, where 0 represents off and 1 represents on.

Counting in binary works the same way as counting in base-10, the decimal system we use every day.

For example, in base-10 each placeholder has 10 possible values, 0 through 9.

If you want to express the number 9, you simply write 9, because a single placeholder can hold any value up to 9.

Each placeholder is worth 10 times the one to its right: ones, tens, hundreds, and so on.

To express the number 10, you must add a second digit. You put a 1 in the tens place and reset the ones place to 0, since a single digit can only go from 0 through 9.

This leaves you with 10.

To understand binary using your knowledge of the decimal system, think of the number 10 in decimal by saying yes or no to each placeholder.

If there is a 1 in the tens placeholder, that means that yes, there is one 10.

If there is a 0 in the ones placeholder, that means that there are no ones, leaving you with 10.

One ten plus zero ones equals 10.

Binary works the same way, but with only 0 or 1 as the option for each placeholder.

Each placeholder is a power of 2.

For example, to write the number 1 in binary, you simply write 1, since the placeholder can be either 0 or 1.

To write the number 2, you have to add another placeholder digit, leaving you with 1 0 in binary.

(Note: when reading binary, read each digit as “one” or “zero,” so 1 0 is read “one zero.”)

Once again, think of it in terms of yes and no.

Yes, there is one 2 (the first digit in binary 1 0), and no, there are no ones (the second digit in binary 1 0).

This leaves you with one two and no ones, which equals 2.
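It may help to see more than the first two numbers. Here is a short Python sketch (Python is our choice for illustration; the article itself has no code) that counts from 0 to 8. It uses Python's built-in `format(n, "b")` to show each number's binary digits. `count_in_binary` is a hypothetical helper name.

```python
# Count from 0 to `limit`, pairing each number with its binary digits.
# format(n, "b") returns the base-2 digits of n as a string.
def count_in_binary(limit):
    rows = []
    for n in range(limit + 1):
        rows.append((n, format(n, "b")))
    return rows

for decimal, binary in count_in_binary(8):
    print(f"{decimal:>2} -> {binary:>4}")
```

Notice that whenever every digit is already 1 (1, 11, 111), the next number needs an extra digit. This is just like 9 rolling over to 10 in decimal.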

Since each digit can only be a zero or one, the placeholders increase by powers of 2. For an eight-digit binary number, the place values from left to right are 128, 64, 32, 16, 8, 4, 2, and 1.

The binary number 10010110 would be yes to 128, no to 64, no to 32, yes to 16, no to 8, yes to 4, yes to 2, and no to 1.

Add these together and you get 128+16+4+2=150.

| 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 |
|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 1 | 0 | 1 | 1 | 0 |
| Yes | No | No | Yes | No | Yes | Yes | No |

128 + 16 + 4 + 2 = 150
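The yes/no reading of the table can be written as a small Python sketch with two functions. `binary_to_decimal` adds up the place value of every 1. `decimal_to_binary` goes the other way by repeatedly dividing by 2. Both function names are our own, and the 8-digit default width simply matches the eight placeholders in the table.

```python
# Convert between binary strings and decimal integers using place values.

def binary_to_decimal(bits):
    """Add up the place value (a power of 2) of every position holding a 1."""
    total = 0
    for position, digit in enumerate(reversed(bits)):
        if digit == "1":               # "yes" to this placeholder
            total += 2 ** position
        elif digit != "0":
            raise ValueError(f"not a binary digit: {digit!r}")
    return total

def decimal_to_binary(n, width=8):
    """Build the binary digits by repeatedly dividing by 2 and keeping remainders."""
    if n < 0:
        raise ValueError("only non-negative numbers are handled here")
    digits = []
    while n > 0:
        digits.append(str(n % 2))      # each remainder is the next digit, right to left
        n //= 2
    return "".join(reversed(digits)).rjust(width, "0")

print(binary_to_decimal("10010110"))  # 150
print(decimal_to_binary(150))         # 10010110
```

In practice, Python's built-ins `int("10010110", 2)` and `format(150, "08b")` do the same conversions. The hand-written versions just make the place-value reasoning visible.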

Each digit, either 0 or 1, is called a bit, and a group of eight bits is called a byte.

Why Do Computers Use Binary Instead Of Base-10?

Computers use binary instead of base-10 for simplicity.

A computer has no built-in understanding of the base-10 (decimal) system that humans use. At the hardware level, it can only tell physical states apart, such as whether a switch is on or off.

Computers are built from transistors, which are small electrical switches.

A switch is easy for a computer to understand.

The electrical current can either trigger the switch to the on position or leave the switch as it is in the off position.

With binary, there are only these two options of on and off, making it easy for the computer to understand.

A base-10 system is much more complicated, with each placeholder having 10 possible values.

For a computer to understand such a system, the electrical signal going into the transistor would have to be slightly different for each of the 10 possible values.

These small differences would have to be extremely precise. They would be very difficult to keep reliable in every environment, where heat and electrical noise can shift the signal.
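The precision problem can be illustrated with a toy model in Python. It is a simplification, not how real circuits are specified. It assumes:

- a hypothetical signal between 0 and 5 volts, split into evenly spaced levels;
- a receiver that reads whichever level is closest;
- an assumed 0.4 volts of noise.

```python
# Toy model: a signal from 0 V up to an assumed maximum of 5 V is divided into
# evenly spaced levels, and the receiver picks the nearest level.
V_MAX = 5.0  # hypothetical maximum voltage, chosen only for illustration

def level_voltage(value, levels):
    """Voltage used to send `value` when the signal has `levels` distinct levels."""
    step = V_MAX / (levels - 1)
    return value * step

def read_level(voltage, levels):
    """Decode a (possibly noisy) voltage to the nearest level."""
    step = V_MAX / (levels - 1)
    nearest = round(voltage / step)
    return max(0, min(levels - 1, nearest))  # clamp to a valid level

def noise_margin(levels):
    """How far a voltage can drift before it is read as a neighboring level."""
    return V_MAX / (levels - 1) / 2

drift = 0.4  # an assumed 0.4 V of electrical noise
print(read_level(level_voltage(1, 2) - drift, 2))    # binary: sent 1, read 1
print(read_level(level_voltage(7, 10) + drift, 10))  # decimal: sent 7, read 8
print(noise_margin(2), round(noise_margin(10), 3))   # 2.5 0.278
```

With two levels, the reading can drift by up to 2.5 volts before it is misread. With ten levels, it can drift by only about 0.28 volts. `noise_margin(3)` gives 1.25 volts for a three-level (ternary) signal, which sits between the two.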

Another counting system that can be used for computing is the ternary system, which allows three possible values for each placeholder.

This computing method, however, never gained traction in the computing world. Precisely controlling the voltage into the transistors is harder, and existing binary systems would have to be completely reworked to use it.

Binary remains the basis of computing due to its simplicity and speed of computing.

Do Smartphones Use Binary?

Yes, smartphones use binary to communicate.

With older traditional cell phone technology, the audio signal was sent by radio wave through the receiving tower to the specified device.

The voice was transmitted as an analog signal: the radio wave simply varied along with the sound, without being converted into numbers.

With smartphones, the device does not transmit the voice as an analog signal. Instead, it converts the signal into a series of 1s and 0s, or binary, which increases the amount of data that can be sent and the speed at which it is sent.

By converting to binary, a smartphone can send not only audio, but files, pictures, and videos as well.

How Is Text Represented In Binary?

Text is represented in binary by mapping a specific character to a specific binary number using a specific encoding method.

I know that definition sounds very vague and complicated, but stay with me and we will break it down.

The first thing to understand is that, while you are most likely reading this article in the English language, a computer that only communicates through binary code has no idea what the English language is.

The computer only knows that if you press the “a” button on your keyboard, it has been programmed to equate that specific button with a specific binary number.

It stores this binary number and, when called upon to regurgitate the character represented by the button pressed, knows that that specific binary number equals the letter “a.”

This mapping of specific binary numbers to specific characters holds true for all characters available in the given language including symbols such as !, +, or even a blank space by pressing the space bar.

But wait! If the computer does not understand the English language, how does it know to display the English character “a”?

This is where the encoding method comes into play.

A computer, as previously stated, has no idea what the English language is.

For the computer to map a specific binary number to a specific character, it has to be told which character set it should use for the mapping.

For example, the computer can be told to use the ASCII character set as its encoding method.

This lets the computer know that it will map a specific character, in this case, “a,” to the corresponding binary number value as defined by the ASCII map.
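Here is a sketch of that mapping in Python:

- `ord()` returns a character's code, and `str.encode("ascii")` produces its ASCII bytes.
- Each code is shown padded to eight bits (one byte).
- The helper names `to_ascii_bits` and `from_ascii_bits` are hypothetical.

```python
# Map each character to its ASCII code and show that code as 8 binary digits.
def to_ascii_bits(text):
    # encode("ascii") raises UnicodeEncodeError for characters outside ASCII
    return [format(byte, "08b") for byte in text.encode("ascii")]

def from_ascii_bits(bit_strings):
    # Reverse the mapping: binary string -> number -> character
    return bytes(int(bits, 2) for bits in bit_strings).decode("ascii")

print(ord("a"), format(ord("a"), "08b"))  # 97 01100001
print(to_ascii_bits("Hi!"))               # ['01001000', '01101001', '00100001']
print(from_ascii_bits(["01100001"]))      # a
```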

Different regions and languages have their own unique character maps for this binary conversion such as KS X 1001:1992 for South Korea or KPS 9566 for North Korea.

Is There A Universal Binary Code For Text?

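Yes, there is a universal code for text, and it is called Unicode. Unicode assigns every character a unique number, and encodings such as UTF-8 turn those numbers into binary.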

Before the massive international reach of the internet, each region and language had its own set of encoding rules for text.

This lack of cohesion was not an issue initially because most computing was done within the scope of one’s own language parameters.

Computers in the United States had no problem speaking and sharing files with other computers in the United States.

However, the internet opened dialogue between countries and continents, leading to the need for a global text standard.

Remember that a computer only communicates in 1s and 0s. Suppose a file from Korea was encoded using the Korean standard and then sent to the United States to be read. The computer in the United States would take the 1s and 0s from the Korean computer and try to map them to the English character map.

This would result in pure gibberish, since Korean is written in Hangul, an alphabet entirely different from the Latin letters English uses.

One way to avoid this confusion is to tell the computer which character map to use when decoding the file.

This process can be highly complicated, however, especially when dealing with communications for multiple languages.
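The Python sketch below shows the mismatch described above. It encodes Korean text with EUC-KR (Python's `euc_kr` codec, an encoding built on the KS X 1001 standard) and then decodes the same bytes two ways. Latin-1 stands in for an “English” character map here. That choice is our assumption, since plain ASCII would simply reject these bytes with an error.

```python
# The same bytes decoded with two different character maps.
original = "한글"  # "Hangul", the name of the Korean alphabet

# Encode with EUC-KR, the byte encoding built on the South Korean KS X 1001 standard.
korean_bytes = original.encode("euc_kr")
print(korean_bytes.hex(" "))

# Wrong map (assumption: Latin-1 stands in for an "English" map): accented Latin gibberish.
print(korean_bytes.decode("latin-1"))

# Right map: decode with the same encoding that was used to write the file.
print(korean_bytes.decode("euc_kr"))
```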

Enter Unicode.

Unicode is an international standard for representing text. As of version 13.0, it defines 143,859 characters across 154 modern and historical scripts, along with symbols, emojis, and more.

Unicode was developed to bridge the gap between character encoding maps to create a universal character map that can be used by all countries and regions to communicate effectively with one another.
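Under Unicode, every character gets a single number called a code point. An encoding such as UTF-8 then turns that number into one to four bytes. This Python sketch prints both for a few sample characters; `describe` is a hypothetical helper.

```python
# Show each character's Unicode code point and its UTF-8 bytes.
def describe(ch):
    code_point = f"U+{ord(ch):04X}"  # the conventional way of writing a code point
    return code_point, ch.encode("utf-8")

for ch in ["a", "é", "한", "😀"]:
    code_point, utf8 = describe(ch)
    print(ch, code_point, utf8.hex(" "), f"({len(utf8)} byte(s))")
```

Plain ASCII characters such as “a” keep the same single byte in UTF-8. Characters further along in Unicode, like Korean syllables or emojis, take more bytes.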

How Are Images Represented In Binary?

Images are represented in binary by assigning a binary value to each pixel.

Multitudes of pixels are placed next to one another in a grid pattern to represent an image.

Just as with text, extra information must also be stored. This tells the computer how many pixels make up each row and column and what range of color values the image uses.

This information is recorded as the image’s metadata.

Creating a black-and-white image is quite simple: each pixel is assigned a binary value of either 0 for white or 1 for black.

To create color, more bits must be used for each pixel.

For example, a two-bit color scheme could use 00 for white, 01 for blue, 10 for green, and 11 for red.
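A black-and-white image can be sketched in Python as a string of bits plus a width. The 5-by-5 letter “A” below is made up for illustration. `WIDTH` plays the role of the metadata described above: decode the same bits with the wrong width and the picture falls apart.

```python
# A made-up 5x5 black-and-white image: 1 = black pixel, 0 = white pixel.
# WIDTH stands in for the image metadata that says how long each row is.
WIDTH = 5
pixels = "00100" "01010" "10001" "11111" "10001"  # rows stored one after another

def render(bits, width):
    """Cut the bit string into rows of `width` pixels and draw 1 as '#', 0 as '.'."""
    rows = [bits[i:i + width] for i in range(0, len(bits), width)]
    return "\n".join("".join("#" if b == "1" else "." for b in row) for row in rows)

print(render(pixels, WIDTH))  # draws the letter A
print()
print(render(pixels, 4))      # same bits, wrong width: the picture is scrambled
```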

The more bits you add to the binary number, the more colors you can achieve.
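The doubling is easy to check in Python. `PALETTE_2BIT` reproduces the example two-bit colors from the text. `decode_row` (a hypothetical helper) splits a row of pixel data into two-bit codes.

```python
# The example 2-bit palette from the text: each pixel is a 2-bit code.
PALETTE_2BIT = {"00": "white", "01": "blue", "10": "green", "11": "red"}

def colors_available(bits_per_pixel):
    # Each extra bit doubles the number of distinct codes.
    return 2 ** bits_per_pixel

def decode_row(bits):
    # Split a row of pixel data into 2-bit chunks and look each one up.
    return [PALETTE_2BIT[bits[i:i + 2]] for i in range(0, len(bits), 2)]

print(decode_row("00011011"))  # ['white', 'blue', 'green', 'red']
for n in (1, 2, 8, 24):
    print(n, "bits ->", colors_available(n), "colors")
```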

Today, the RGB (Red-Green-Blue) color model is the most common way to represent color.

Each color is stored as a 24-bit number. The first 8 bits represent how much red is in the color, the second 8 bits how much green, and the final 8 bits how much blue.

By mixing these values, 16,777,216 possible colors can be represented.
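In code, the three 8-bit channels are typically combined with bit shifts. This Python sketch assumes the red-green-blue ordering described above. `pack_rgb` and `unpack_rgb` are hypothetical names, and the orange color is just an example.

```python
# Pack three 8-bit channel values into one 24-bit number, and unpack it again.
def pack_rgb(red, green, blue):
    for channel in (red, green, blue):
        if not 0 <= channel <= 255:
            raise ValueError("each channel must fit in 8 bits (0-255)")
    return (red << 16) | (green << 8) | blue  # red in the top 8 bits, blue in the bottom 8

def unpack_rgb(color):
    return (color >> 16) & 0xFF, (color >> 8) & 0xFF, color & 0xFF

orange = pack_rgb(255, 165, 0)
print(format(orange, "024b"))  # 111111111010010100000000
print(f"#{orange:06X}")        # #FFA500, the same value written as a web hex color
print(unpack_rgb(orange))      # (255, 165, 0)
print(256 ** 3)                # 16777216
```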

How Is Audio Represented In Binary?

Sound is created by waves.

Each wave contains two distinct factors: amplitude and frequency.

The amplitude measures how loud or soft the sound is, while the frequency indicates the pitch of the sound.

For a computer to understand and replay a sound wave, it must first be converted into voltage, usually using a recording device such as a microphone.

Once the sound waves are converted into voltage changes, binary samples can be taken through the use of an Analog-to-Digital Converter, or ADC.

An ADC turns voltage into a binary number by taking samples of the wave at regular intervals.

Each sample of the voltage change is recorded in binary.
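The sampling step can be simulated in Python. This is a toy analog-to-digital converter with stated assumptions:

- A pure 1,000 Hz sine wave, scaled to the range -1 to 1, stands in for the microphone voltage.
- Samples are taken 8,000 times per second.
- Each sample is rounded to one of eight levels, so it fits in three bits (`bit_depth=3`).

Real ADCs are hardware and differ in the details. `sample_and_quantize` is a hypothetical name.

```python
import math

# Toy analog-to-digital converter: sample a pure tone and store each sample as an n-bit number.
def sample_and_quantize(freq_hz, sample_rate_hz, bit_depth, n_samples):
    levels = 2 ** bit_depth                  # number of distinct values a sample can take
    samples = []
    for i in range(n_samples):
        t = i / sample_rate_hz               # time of this sample, in seconds
        voltage = math.sin(2 * math.pi * freq_hz * t)  # stand-in voltage from -1 to 1
        # Map -1..1 onto the integer codes 0..levels-1 and round to the nearest one
        code = round((voltage + 1) / 2 * (levels - 1))
        samples.append(code)
    return samples

codes = sample_and_quantize(freq_hz=1000, sample_rate_hz=8000, bit_depth=3, n_samples=8)
print(codes)                              # one wave cycle as numbers from 0 to 7
print([format(c, "03b") for c in codes])  # the same samples as 3-bit binary
```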

To replay the recorded audio, the computer uses a Digital-to-Analog Converter, or DAC.

The DAC turns the saved binary sample data into the correct voltage for each sample, which passes through an amplifier and triggers vibrations in the listening device, such as speakers or headphones, at the specified voltage to create sound.

These voltage changes reproduce the frequencies and amplitudes of the original sound wave.

When all the samples are played in conjunction with one another, the result is a continuous stream of sound or audio.

The quality of the audio recording depends on the sample rate and the bit depth.

Sample Rate

The sample rate is how many samples are taken per second and is measured in hertz (Hz).

The higher the sample rate, the more precise the audio recording.

For example, imagine you are told to listen to and record a person telling a story, but you are only allowed to record every 10th word.

When you go to play the recording of the story, much of the story is lost due to only every 10th word being recorded.

As you increase the number of words you are allowed to record from the story, the playback of the story becomes clearer and clearer until all the words are recorded, resulting in a seamless story.

The same principle holds true for the sample rate.

The more samples per second that the computer records, the higher the quality of the audio playback.

A standard sampling rate for audio files is 44.1 kHz or 44,100 samples per second.
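A quick Python calculation shows what 44.1 kHz means in practice. `samples_needed` is a hypothetical helper, and the three-minute song length is just an example.

```python
# How many samples a recording needs, and how far apart they are in time.
SAMPLE_RATE_HZ = 44_100  # the common 44.1 kHz rate

def samples_needed(seconds, sample_rate_hz=SAMPLE_RATE_HZ):
    return round(seconds * sample_rate_hz)

print(samples_needed(1))         # 44100
print(samples_needed(180))       # 7938000 samples for a 3-minute song (per channel)
print(1 / SAMPLE_RATE_HZ * 1e6)  # microseconds between samples, about 22.7
```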

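Bit Depth

The number of bits used for each sample of the sound wave is referred to as the bit depth. This is different from the bit rate, which is the number of bits per second of audio: the sample rate multiplied by the bit depth and the number of channels.

The higher the bit depth, the higher the quality of the audio.

For example, if the bit depth for an audio file is 2 bits, there are only four possible values that can be recorded for each sample.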

This would obviously not result in a very precise sound value.

However, the more bits used for each sample, the more precise the sampling will be.

Think of audio sampling the same way you think of color sampling.

Suppose you are shown a photo with numerous shades of color and told to recreate it using only white, red, green, and blue. The result may resemble the original, but it will not come close to a perfect recreation.

The same principle holds true for audio samples.

If a computer is told it can only use 2 bits to represent a sound, it will attempt to pick the closest representation it can but will lose a lot in the process.

A common bit depth, used for audio CDs, is 16 bits, which gives 65,536 possible values for each sample.
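This Python sketch compares bit depths by rounding the same value to the nearest available level at 2 and 16 bits. `quantize` is a hypothetical helper, and the value 0.3 (on a scale of -1 to 1) is arbitrary. The last line multiplies sample rate, bits per sample, and two stereo channels to get the data rate of CD-quality audio.

```python
# Round a sample to the nearest level that a given number of bits can represent.
def quantize(value, bits):
    """Snap a value in the range -1..1 to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits
    step = 2 / (levels - 1)            # spacing between neighboring levels
    index = round((value + 1) / step)  # number of the nearest level, counting up from -1
    return -1 + index * step           # the value that level stands for

true_value = 0.3
for bits in (2, 16):
    error = abs(quantize(true_value, bits) - true_value)
    print(f"{bits:>2} bits: {2 ** bits:>6} levels, rounding error {error:.6f}")

# Data rate of CD audio: samples/second x bits/sample x 2 channels (stereo)
print(44_100 * 16 * 2)  # 1411200 bits per second
```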

